-

+ 1. Use a logging library that writes to stderr or files

-The Inspector provides several features for interacting with your MCP server:

+ ### System requirements

-### Server connection pane

+ * [.NET 8 SDK](https://dotnet.microsoft.com/download/dotnet/8.0) or higher installed.

-* Allows selecting the [transport](/docs/concepts/transports) for connecting to the server

-* For local servers, supports customizing the command-line arguments and environment

+ ### Set up your environment

-### Resources tab

+ First, let's install `dotnet` if you haven't already. You can download `dotnet` from the [official Microsoft .NET website](https://dotnet.microsoft.com/download/). Verify your `dotnet` installation:

-* Lists all available resources

-* Shows resource metadata (MIME types, descriptions)

-* Allows resource content inspection

-* Supports subscription testing

+ ```bash theme={null}

+ dotnet --version

+ ```

-### Prompts tab

+ Now, let's create and set up your project:

-* Displays available prompt templates

-* Shows prompt arguments and descriptions

-* Enables prompt testing with custom arguments

-* Previews generated messages

+


+ ## Building your server

-MCP creates a bridge between your AI applications and your data through a straightforward system:

+ ### Importing packages and constants

-* **MCP servers** connect to your data sources and tools (like Google Drive or Slack)

-* **MCP clients** are run by AI applications (like Claude Desktop) to connect them to these servers

-* When you give permission, your AI application discovers available MCP servers

-* The AI model can then use these connections to read information and take actions

+ Open `src/main.rs` and add these imports and constants at the top:

-This modular system means new capabilities can be added without changing AI applications themselves—just like adding new accessories to your computer without upgrading your entire system.

+ ```rust theme={null}

+ use anyhow::Result;

+ use rmcp::{

+ ServerHandler, ServiceExt,

+ handler::server::{router::tool::ToolRouter, tool::Parameters},

+ model::*,

+ schemars, tool, tool_handler, tool_router,

+ };

+ use serde::Deserialize;

+ use serde::de::DeserializeOwned;

-## Who creates and maintains MCP servers?

+ const NWS_API_BASE: &str = "https://api.weather.gov";

+ const USER_AGENT: &str = "weather-app/1.0";

+ ```

-MCP servers are developed and maintained by:

+ The `rmcp` crate provides the Model Context Protocol SDK for Rust, with features for server implementation, procedural macros, and stdio transport.

-* Developers at Anthropic who build servers for common tools and data sources

-* Open source contributors who create servers for tools they use

-* Enterprise development teams building servers for their internal systems

-* Software providers making their applications AI-ready

+ ### Data structures

-Once an open source MCP server is created for a data source, it can be used by any MCP-compatible AI application, creating a growing ecosystem of connections. See our [list of example servers](https://modelcontextprotocol.io/examples), or [get started building your own server](https://modelcontextprotocol.io/quickstart/server).

+ Next, let's define the data structures for deserializing responses from the National Weather Service API:

+ ```rust theme={null}

+ #[derive(Debug, Deserialize)]

+ struct AlertsResponse {

+ features: Vec



+### Base Protocol


+* [JSON-RPC](https://www.jsonrpc.org/) message format

+* Stateful connections

+* Server and client capability negotiation


-A response to a request that indicates an error occurred.

Refers to any valid JSON-RPC object that can be decoded off the wire, or encoded to be sent.

A notification which does not expect a response.

A request that expects a response.

A response to a request, containing either the result or error.
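The message kinds above differ only in which JSON-RPC members they carry. As a minimal Rust sketch, assuming the message has already been parsed and reduced to member-presence flags (the `RawMessage` type is hypothetical, not an `rmcp` type), classification is a single match:

```rust
// Hypothetical, simplified view of a decoded JSON-RPC 2.0 message: only the
// presence of each member that decides the message kind is recorded.
// `has_id: false` also stands in for a null id.
#[derive(Debug, Default)]
struct RawMessage {
    has_id: bool,
    has_method: bool,
    has_result: bool,
    has_error: bool,
}

#[derive(Debug, PartialEq)]
enum MessageKind {
    Request,        // id + method: expects a response
    Notification,   // method without id: expects no response
    ResultResponse, // id + result: successful response
    ErrorResponse,  // error (JSON-RPC 2.0 allows a null id if it was unknown)
    Invalid,
}

fn classify(m: &RawMessage) -> MessageKind {
    match (m.has_id, m.has_method, m.has_result, m.has_error) {
        (true, true, false, false) => MessageKind::Request,
        (false, true, false, false) => MessageKind::Notification,
        (true, false, true, false) => MessageKind::ResultResponse,
        (_, false, false, true) => MessageKind::ErrorResponse,
        // Anything else, e.g. both `result` and `error`, is malformed.
        _ => MessageKind::Invalid,
    }
}

fn main() {
    let ping = RawMessage { has_id: true, has_method: true, ..Default::default() };
    println!("{:?}", classify(&ping)); // prints "Request"
}
```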

A successful (non-error) response to a request.

Optional annotations for the client. The client can use annotations to inform how objects are used or displayed

Describes who the intended audience of this object or data is.

It can include multiple entries to indicate content useful for multiple audiences (e.g., \["user", "assistant"]).

Describes how important this data is for operating the server.

A value of 1 means "most important," and indicates that the data is effectively required, while 0 means "least important," and indicates that the data is entirely optional.

The moment the resource was last modified, as an ISO 8601 formatted string.

Should be an ISO 8601 formatted string (e.g., "2025-01-12T15:00:58Z").

Examples: last activity timestamp in an open file, timestamp when the resource was attached, etc.
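A sketch of how a receiver might model these annotation fields in Rust. `Annotations` and `Role` here are hypothetical local types, not the SDK's; the range check encodes the documented 0-to-1 priority scale, and treating an empty audience as unrestricted is an assumption.

```rust
// Hypothetical local mirror of the annotation fields; not an SDK type.
#[derive(Debug, Clone, Copy, PartialEq)]
enum Role {
    User,
    Assistant,
}

#[derive(Debug)]
struct Annotations {
    audience: Vec<Role>,
    priority: Option<f64>,
    last_modified: Option<String>, // ISO 8601, e.g. "2025-01-12T15:00:58Z"
}

impl Annotations {
    /// Rejects priorities outside the documented 0.0..=1.0 range (NaN included).
    fn new(
        audience: Vec<Role>,
        priority: Option<f64>,
        last_modified: Option<String>,
    ) -> Result<Self, String> {
        if let Some(p) = priority {
            if !(0.0..=1.0).contains(&p) {
                return Err(format!("priority {p} is outside [0, 1]"));
            }
        }
        Ok(Self { audience, priority, last_modified })
    }

    /// Assumption: an empty audience list places no restriction.
    fn is_for(&self, role: Role) -> bool {
        self.audience.is_empty() || self.audience.contains(&role)
    }
}

fn main() {
    let a = Annotations::new(
        vec![Role::User, Role::Assistant],
        Some(1.0),
        Some("2025-01-12T15:00:58Z".to_string()),
    )
    .unwrap();
    println!(
        "for user: {}, priority: {:?}, modified: {}",
        a.is_for(Role::User),
        a.priority,
        a.last_modified.as_deref().unwrap_or("unknown")
    );
}
```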

An opaque token used to represent a cursor for pagination.

A response that indicates success but carries no data.

The error type that occurred.

A short description of the error. The message SHOULD be limited to a concise single sentence.

Additional information about the error. The value of this member is defined by the sender (e.g. detailed error information, nested errors etc.).

An optionally-sized icon that can be displayed in a user interface.

A standard URI pointing to an icon resource. May be an HTTP/HTTPS URL or a data: URI with Base64-encoded image data.

Consumers SHOULD take steps to ensure URLs serving icons are from the same domain as the client/server or a trusted domain.

Consumers SHOULD take appropriate precautions when consuming SVGs as they can contain executable JavaScript.

Optional MIME type override if the source MIME type is missing or generic. For example: "image/png", "image/jpeg", or "image/svg+xml".

Optional array of strings that specify sizes at which the icon can be used. Each string should be in WxH format (e.g., "48x48", "96x96") or "any" for scalable formats like SVG.

If not provided, the client should assume that the icon can be used at any size.
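The `sizes` format is simple enough to parse by hand. A minimal sketch, assuming a client wants to know whether an icon fits an exact pixel size (`parse_icon_size` and `usable_at` are hypothetical helpers):

```rust
#[derive(Debug, PartialEq)]
enum IconSize {
    Any,
    Fixed { width: u32, height: u32 },
}

/// Parses one entry of an icon's `sizes` array ("48x48" or "any").
fn parse_icon_size(s: &str) -> Option<IconSize> {
    if s == "any" {
        return Some(IconSize::Any);
    }
    let (w, h) = s.split_once('x')?;
    Some(IconSize::Fixed { width: w.parse().ok()?, height: h.parse().ok()? })
}

/// Whether an icon may be used at `width` x `height`.
/// A missing `sizes` array means the icon can be used at any size.
fn usable_at(sizes: Option<&[&str]>, width: u32, height: u32) -> bool {
    match sizes {
        None => true,
        Some(list) => list.iter().any(|s| match parse_icon_size(s) {
            Some(IconSize::Any) => true,
            Some(IconSize::Fixed { width: w, height: h }) => w == width && h == height,
            None => false, // skip malformed entries
        }),
    }
}

fn main() {
    let sizes = ["48x48", "96x96"];
    println!("{}", usable_at(Some(&sizes[..]), 96, 96)); // prints "true"
}
```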

Optional specifier for the theme this icon is designed for. light indicates the icon is designed to be used with a light background, and dark indicates the icon is designed to be used with a dark background.

If not provided, the client should assume the icon can be used with any theme.

The severity of a log message.

These map to syslog message severities, as specified in RFC-5424: [https://datatracker.ietf.org/doc/html/rfc5424#section-6.2.1](https://datatracker.ietf.org/doc/html/rfc5424#section-6.2.1)

A progress token, used to associate progress notifications with the original request.

A uniquely identifying ID for a request in JSON-RPC.

See General fields: \_meta for notes on \_meta usage.

The sender or recipient of messages and data in a conversation.

Audio provided to or from an LLM.

The base64-encoded audio data.

The MIME type of the audio. Different providers may support different audio types.

Optional annotations for the client.

See General fields: \_meta for notes on \_meta usage.

The URI of this resource.

The MIME type of this resource, if known.

See General fields: \_meta for notes on \_meta usage.

A base64-encoded string representing the binary data of the item.

The contents of a resource, embedded into a prompt or tool call result.

It is up to the client how best to render embedded resources for the benefit of the LLM and/or the user.

Optional annotations for the client.

See General fields: \_meta for notes on \_meta usage.

An image provided to or from an LLM.

The base64-encoded image data.

The MIME type of the image. Different providers may support different image types.

Optional annotations for the client.

See General fields: \_meta for notes on \_meta usage.

A resource that the server is capable of reading, included in a prompt or tool call result.

Note: resource links returned by tools are not guaranteed to appear in the results of resources/list requests.

Optional set of sized icons that the client can display in a user interface.

Clients that support rendering icons MUST support at least the following MIME types:

* image/png - PNG images (safe, universal compatibility)
* image/jpeg (and image/jpg) - JPEG images (safe, universal compatibility)

Clients that support rendering icons SHOULD also support:

* image/svg+xml - SVG images (scalable but requires security precautions)
* image/webp - WebP images (modern, efficient format)

Intended for programmatic or logical use, but used as a display name in past specs or fallback (if title isn't present).

Intended for UI and end-user contexts, optimized to be human-readable and easily understood, even by those unfamiliar with domain-specific terminology.

If not provided, the name should be used for display (except for Tool, where annotations.title should be given precedence over using name, if present).

The URI of this resource.

A description of what this resource represents.

This can be used by clients to improve the LLM's understanding of available resources. It can be thought of like a "hint" to the model.

The MIME type of this resource, if known.

Optional annotations for the client.

The size of the raw resource content, in bytes (i.e., before base64 encoding or any tokenization), if known.

This can be used by Hosts to display file sizes and estimate context window usage.
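Because `size` counts raw bytes, a server that only holds the base64 text can still compute it without decoding. A sketch assuming standard, padded base64 (RFC 4648), where every 4 characters carry 3 bytes; `decoded_len` and `encoded_len` are hypothetical helpers:

```rust
/// Raw byte count represented by a padded, standard base64 string.
/// Every 4 characters carry 3 bytes; each trailing '=' removes one byte.
fn decoded_len(b64: &str) -> Option<usize> {
    if b64.len() % 4 != 0 {
        return None; // assumption: input is padded
    }
    let padding = b64.bytes().rev().take_while(|&b| b == b'=').count();
    if padding > 2 {
        return None;
    }
    Some(b64.len() / 4 * 3 - padding)
}

/// Length of the base64 encoding of `n` raw bytes: 4 * ceil(n / 3).
fn encoded_len(n: usize) -> usize {
    (n + 2) / 3 * 4
}

fn main() {
    // "aGVsbG8=" is the base64 encoding of the 5 bytes "hello".
    println!("{:?} {}", decoded_len("aGVsbG8="), encoded_len(5)); // prints "Some(5) 8"
}
```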

See General fields: \_meta for notes on \_meta usage.

Text provided to or from an LLM.

The text content of the message.

Optional annotations for the client.

See General fields: \_meta for notes on \_meta usage.

The URI of this resource.

The MIME type of this resource, if known.

See General fields: \_meta for notes on \_meta usage.

The text of the item. This must only be set if the item can actually be represented as text (not binary data).

A request from the client to the server, to ask for completion options.

Parameters for a completion/complete request.

See General fields: \_meta for notes on \_meta usage.

If specified, the caller is requesting out-of-band progress notifications for this request (as represented by notifications/progress). The value of this parameter is an opaque token that will be attached to any subsequent notifications. The receiver is not obligated to provide these notifications.

The argument's information

The name of the argument

The value of the argument to use for completion matching.

Additional, optional context for completions

`arguments?: { [key: string]: string }` (optional): Previously-resolved variables in a URI template or prompt.

The server's response to a completion/complete request

See General fields: \_meta for notes on \_meta usage.

An array of completion values. Must not exceed 100 items.

`total?: number` (optional): The total number of completion options available. This can exceed the number of values actually sent in the response.

`hasMore?: boolean` (optional): Indicates whether there are additional completion options beyond those provided in the current response, even if the exact total is unknown.
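A minimal server-side sketch of filling in `values`, `total`, and `hasMore` while respecting the 100-item cap. Prefix matching is an assumption (the protocol leaves the matching strategy to the server), and `complete` is a hypothetical helper:

```rust
/// Documented cap on the number of values in one completion/complete response.
const MAX_COMPLETION_VALUES: usize = 100;

#[derive(Debug, PartialEq)]
struct Completion {
    values: Vec<String>,
    total: Option<usize>,
    has_more: Option<bool>,
}

/// Prefix-matches `candidates` against the argument value, then truncates
/// the result to the 100-item limit while reporting the full match count.
fn complete(candidates: &[&str], value: &str) -> Completion {
    let matches: Vec<String> = candidates
        .iter()
        .filter(|c| c.starts_with(value))
        .map(|c| c.to_string())
        .collect();
    let total = matches.len();
    let values: Vec<String> = matches.into_iter().take(MAX_COMPLETION_VALUES).collect();
    let has_more = total > values.len();
    Completion { values, total: Some(total), has_more: Some(has_more) }
}

fn main() {
    let c = complete(&["python", "pytorch", "rust"], "py");
    println!("{:?} total={:?} has_more={:?}", c.values, c.total, c.has_more);
}
```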

Identifies a prompt.

Intended for programmatic or logical use, but used as a display name in past specs or fallback (if title isn't present).

Intended for UI and end-user contexts, optimized to be human-readable and easily understood, even by those unfamiliar with domain-specific terminology.

If not provided, the name should be used for display (except for Tool, where annotations.title should be given precedence over using name, if present).

A request from the server to elicit additional information from the user via the client.

The parameters for a request to elicit additional information from the user via the client.

The client's response to an elicitation request.

See General fields: \_meta for notes on \_meta usage.

The user action in response to the elicitation.

The submitted form data, only present when action is "accept" and mode was "form". Contains values matching the requested schema. Omitted for out-of-band mode responses.
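The rule about when form data may appear can be checked mechanically. A sketch with hypothetical local types; `Decline` and `Cancel` are assumed to be the non-accept actions defined by the specification, and form data is simplified to string key/value pairs:

```rust
#[derive(Debug, Clone, Copy, PartialEq)]
enum ElicitAction {
    Accept,
    Decline,
    Cancel,
}

#[derive(Debug, Clone, Copy, PartialEq)]
enum ElicitMode {
    Form,
    Url,
}

/// Enforces the rule above: `content` may only be present when the user
/// accepted a form-mode request.
fn check_elicit_result(
    mode: ElicitMode,
    action: ElicitAction,
    content: Option<&[(String, String)]>,
) -> Result<(), String> {
    let content_allowed = mode == ElicitMode::Form && action == ElicitAction::Accept;
    if content.is_some() && !content_allowed {
        return Err(format!("content not allowed for {action:?} in {mode:?} mode"));
    }
    Ok(())
}

fn main() {
    let data = vec![("name".to_string(), "Ada".to_string())];
    let ok = check_elicit_result(ElicitMode::Form, ElicitAction::Accept, Some(data.as_slice()));
    let bad = check_elicit_result(ElicitMode::Form, ElicitAction::Cancel, Some(data.as_slice()));
    println!("{ok:?} / {bad:?}");
}
```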

The parameters for a request to elicit non-sensitive information from the user via a form in the client.

If specified, the caller is requesting task-augmented execution for this request. The request will return a CreateTaskResult immediately, and the actual result can be retrieved later via tasks/result.

Task augmentation is subject to capability negotiation - receivers MUST declare support for task augmentation of specific request types in their capabilities.

See General fields: \_meta for notes on \_meta usage.

If specified, the caller is requesting out-of-band progress notifications for this request (as represented by notifications/progress). The value of this parameter is an opaque token that will be attached to any subsequent notifications. The receiver is not obligated to provide these notifications.

The elicitation mode.

The message to present to the user describing what information is being requested.

A restricted subset of JSON Schema. Only top-level properties are allowed, without nesting.

The parameters for a request to elicit information from the user via a URL in the client.

If specified, the caller is requesting task-augmented execution for this request. The request will return a CreateTaskResult immediately, and the actual result can be retrieved later via tasks/result.

Task augmentation is subject to capability negotiation - receivers MUST declare support for task augmentation of specific request types in their capabilities.

See General fields: \_meta for notes on \_meta usage.

If specified, the caller is requesting out-of-band progress notifications for this request (as represented by notifications/progress). The value of this parameter is an opaque token that will be attached to any subsequent notifications. The receiver is not obligated to provide these notifications.

The elicitation mode.

The message to present to the user explaining why the interaction is needed.

The ID of the elicitation, which must be unique within the context of the server. The client MUST treat this ID as an opaque value.

The URL that the user should navigate to.

Use TitledSingleSelectEnumSchema instead. This interface will be removed in a future version.

(Legacy) Display names for enum values. Non-standard according to JSON Schema 2020-12.

Restricted schema definitions that only allow primitive types without nested objects or arrays.

Schema for multiple-selection enumeration with display titles for each option.

Optional title for the enum field.

Optional description for the enum field.

Minimum number of items to select.

Maximum number of items to select.

Schema for array items with enum options and display labels.

Array of enum options with values and display labels.

Optional default value.

Schema for single-selection enumeration with display titles for each option.

Optional title for the enum field.

Optional description for the enum field.

Array of enum options with values and display labels.

The enum value.

Display label for this option.

Optional default value.

Schema for multiple-selection enumeration without display titles for options.

Optional title for the enum field.

Optional description for the enum field.

Minimum number of items to select.

Maximum number of items to select.

Schema for the array items.

Array of enum values to choose from.

Optional default value.

Schema for single-selection enumeration without display titles for options.

Optional title for the enum field.

Optional description for the enum field.

Array of enum values to choose from.

Optional default value.
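For the multi-select schemas above, a client (or a server re-validating the response) might check an answer as follows. This is a sketch: it treats values as plain strings and ignores display titles, and `validate_multi_select` is a hypothetical helper.

```rust
/// Validates a multi-select answer: every value must be one of the enum
/// options, and the number of selected items must respect the optional
/// minimum / maximum bounds.
fn validate_multi_select(
    selected: &[&str],
    options: &[&str],
    min_items: Option<usize>,
    max_items: Option<usize>,
) -> Result<(), String> {
    if let Some(bad) = selected.iter().find(|v| !options.contains(*v)) {
        return Err(format!("{bad:?} is not one of the allowed options"));
    }
    if let Some(min) = min_items {
        if selected.len() < min {
            return Err(format!("select at least {min} item(s)"));
        }
    }
    if let Some(max) = max_items {
        if selected.len() > max {
            return Err(format!("select at most {max} item(s)"));
        }
    }
    Ok(())
}

fn main() {
    let options = ["red", "green", "blue"];
    println!("{:?}", validate_multi_select(&["red", "blue"], &options, Some(1), Some(2)));
}
```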

This request is sent from the client to the server when it first connects, asking it to begin initialization.

Parameters for an initialize request.

See General fields: \_meta for notes on \_meta usage.

If specified, the caller is requesting out-of-band progress notifications for this request (as represented by notifications/progress). The value of this parameter is an opaque token that will be attached to any subsequent notifications. The receiver is not obligated to provide these notifications.

The latest version of the Model Context Protocol that the client supports. The client MAY decide to support older versions as well.

After receiving an initialize request from the client, the server sends this response.

See General fields: \_meta for notes on \_meta usage.

The version of the Model Context Protocol that the server wants to use. This may not match the version that the client requested. If the client cannot support this version, it MUST disconnect.
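The negotiation described above can be sketched as two small functions. The policy of offering the newest supported version when the requested one is unavailable is an assumption, as are the sample version strings in `main`; because the versions are date-shaped ("YYYY-MM-DD"), lexical order matches chronological order.

```rust
/// Server side: echo the client's requested version if supported, otherwise
/// offer the newest version this server supports (assumed policy).
fn server_pick_version(requested: &str, supported: &[&str]) -> Option<String> {
    if supported.contains(&requested) {
        Some(requested.to_string())
    } else {
        supported.iter().max().map(|v| v.to_string())
    }
}

/// Client side: keep the connection only if the server's choice is one the
/// client supports; otherwise it must disconnect.
fn client_accepts(chosen: &str, client_supported: &[&str]) -> bool {
    client_supported.contains(&chosen)
}

fn main() {
    // Example version strings; a real server would list what it implements.
    let server = ["2024-11-05", "2025-06-18"];
    let chosen = server_pick_version("2025-06-18", &server).expect("no versions supported");
    println!(
        "server chose {chosen}; client keeps connection: {}",
        client_accepts(&chosen, &["2025-06-18"])
    );
}
```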

Instructions describing how to use the server and its features.

This can be used by clients to improve the LLM's understanding of available tools, resources, etc. It can be thought of like a "hint" to the model. For example, this information MAY be added to the system prompt.

Capabilities a client may support. Known capabilities are defined here, in this schema, but this is not a closed set: any client can define its own, additional capabilities.

Experimental, non-standard capabilities that the client supports.

Present if the client supports listing roots.

`listChanged?: boolean` (optional): Whether the client supports notifications for changes to the roots list.

Present if the client supports sampling from an LLM.

`context?: object` (optional): Whether the client supports context inclusion via includeContext parameter. If not declared, servers SHOULD only use includeContext: "none" (or omit it).

`tools?: object` (optional): Whether the client supports tool use via tools and toolChoice parameters.

Present if the client supports elicitation from the server.

Present if the client supports task-augmented requests.

`list?: object` (optional): Whether this client supports tasks/list.

`cancel?: object` (optional): Whether this client supports tasks/cancel.

`requests?: { sampling?: { createMessage?: object }; elicitation?: { create?: object } }` (optional): Specifies which request types can be augmented with tasks.

`sampling?: { createMessage?: object }` (optional): Task support for sampling-related requests.

`createMessage?: object` (optional): Whether the client supports task-augmented sampling/createMessage requests.

`elicitation?: { create?: object }` (optional): Task support for elicitation-related requests.

`create?: object` (optional): Whether the client supports task-augmented elicitation/create requests.

Describes the MCP implementation.

Optional set of sized icons that the client can display in a user interface.

Clients that support rendering icons MUST support at least the following MIME types:

* image/png - PNG images (safe, universal compatibility)
* image/jpeg (and image/jpg) - JPEG images (safe, universal compatibility)

Clients that support rendering icons SHOULD also support:

* image/svg+xml - SVG images (scalable but requires security precautions)
* image/webp - WebP images (modern, efficient format)

Intended for programmatic or logical use, but used as a display name in past specs or fallback (if title isn't present).

Intended for UI and end-user contexts, optimized to be human-readable and easily understood, even by those unfamiliar with domain-specific terminology.

If not provided, the name should be used for display (except for Tool, where annotations.title should be given precedence over using name, if present).

An optional human-readable description of what this implementation does.

This can be used by clients or servers to provide context about their purpose and capabilities. For example, a server might describe the types of resources or tools it provides, while a client might describe its intended use case.

An optional URL of the website for this implementation.

Capabilities that a server may support. Known capabilities are defined here, in this schema, but this is not a closed set: any server can define its own, additional capabilities.

Experimental, non-standard capabilities that the server supports.

Present if the server supports sending log messages to the client.

Present if the server supports argument autocompletion suggestions.

Present if the server offers any prompt templates.

`listChanged?: boolean` (optional): Whether this server supports notifications for changes to the prompt list.

Present if the server offers any resources to read.

`subscribe?: boolean` (optional): Whether this server supports subscribing to resource updates.

`listChanged?: boolean` (optional): Whether this server supports notifications for changes to the resource list.

Present if the server offers any tools to call.

`listChanged?: boolean` (optional): Whether this server supports notifications for changes to the tool list.

Present if the server supports task-augmented requests.

`list?: object` (optional): Whether this server supports tasks/list.

`cancel?: object` (optional): Whether this server supports tasks/cancel.

`requests?: { tools?: { call?: object } }` (optional): Specifies which request types can be augmented with tasks.

`tools?: { call?: object }` (optional): Task support for tool-related requests.

`call?: object` (optional): Whether the server supports task-augmented tools/call requests.

A request from the client to the server, to enable or adjust logging.

Parameters for a logging/setLevel request.

See General fields: \_meta for notes on \_meta usage.

If specified, the caller is requesting out-of-band progress notifications for this request (as represented by notifications/progress). The value of this parameter is an opaque token that will be attached to any subsequent notifications. The receiver is not obligated to provide these notifications.

The level of logging that the client wants to receive from the server. The server should send all logs at this level and higher (i.e., more severe) to the client as notifications/message.
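Log levels are ordered by severity, so "this level and higher" reduces to a comparison. A sketch in Rust, assuming MCP's lowercase syslog-style level names (debug through emergency); `LoggingLevel` and `should_send` are hypothetical names:

```rust
/// Log levels in increasing order of severity. Deriving `Ord` on a
/// fieldless enum orders variants by declaration order.
#[derive(Debug, Clone, Copy, PartialEq, Eq, PartialOrd, Ord)]
enum LoggingLevel {
    Debug,
    Info,
    Notice,
    Warning,
    Error,
    Critical,
    Alert,
    Emergency,
}

impl LoggingLevel {
    fn parse(s: &str) -> Option<Self> {
        Some(match s {
            "debug" => Self::Debug,
            "info" => Self::Info,
            "notice" => Self::Notice,
            "warning" => Self::Warning,
            "error" => Self::Error,
            "critical" => Self::Critical,
            "alert" => Self::Alert,
            "emergency" => Self::Emergency,
            _ => return None,
        })
    }
}

/// Send a message only if it is at least as severe as the level the client set.
fn should_send(message: LoggingLevel, client_min: LoggingLevel) -> bool {
    message >= client_min
}

fn main() {
    let min = LoggingLevel::parse("warning").unwrap();
    for name in ["info", "warning", "critical"] {
        let level = LoggingLevel::parse(name).unwrap();
        println!("{name}: send = {}", should_send(level, min));
    }
}
```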

This notification can be sent by either side to indicate that it is cancelling a previously-issued request.

The request SHOULD still be in-flight, but due to communication latency, it is always possible that this notification MAY arrive after the request has already finished.

This notification indicates that the result will be unused, so any associated processing SHOULD cease.

A client MUST NOT attempt to cancel its initialize request.

For task cancellation, use the tasks/cancel request instead of this notification.

Parameters for a notifications/cancelled notification.

See General fields: \_meta for notes on \_meta usage.

The ID of the request to cancel.

This MUST correspond to the ID of a request previously issued in the same direction. This MUST be provided for cancelling non-task requests. This MUST NOT be used for cancelling tasks (use the tasks/cancel request instead).

An optional string describing the reason for the cancellation. This MAY be logged or presented to the user.
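A receiver-side sketch of these rules, assuming a simple in-memory table of in-flight requests (`InFlight` is hypothetical). Late cancellations for requests that already finished are ignored rather than treated as errors; silently ignoring an attempt to cancel initialize is an assumption about receiver behavior, since the text only forbids the client from sending it.

```rust
use std::collections::HashMap;

/// JSON-RPC request IDs may be strings or integers.
#[derive(Debug, Clone, PartialEq, Eq, Hash)]
enum RequestId {
    Num(i64),
    Str(String),
}

/// Hypothetical receiver-side table of in-flight requests (id -> method name).
#[derive(Default)]
struct InFlight {
    requests: HashMap<RequestId, String>,
}

impl InFlight {
    fn start(&mut self, id: RequestId, method: &str) {
        self.requests.insert(id, method.to_string());
    }

    /// Called when a request completes normally.
    fn finish(&mut self, id: &RequestId) {
        self.requests.remove(id);
    }

    /// Handles notifications/cancelled; returns true if in-flight work was stopped.
    fn cancel(&mut self, id: &RequestId) -> bool {
        let cancellable = match self.requests.get(id) {
            // Unknown or already finished: the notification arrived late, so ignore it.
            None => false,
            // Assumption: the receiver ignores attempts to cancel initialize.
            Some(method) => method.as_str() != "initialize",
        };
        if cancellable {
            self.requests.remove(id);
        }
        cancellable
    }
}

fn main() {
    let mut table = InFlight::default();
    table.start(RequestId::Num(7), "tools/call");
    println!("cancelled: {}", table.cancel(&RequestId::Num(7))); // true
    println!("cancelled again: {}", table.cancel(&RequestId::Num(7))); // false
}
```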

This notification is sent from the client to the server after initialization has finished.

An optional notification from the receiver to the requestor, informing them that a task's status has changed. Receivers are not required to send these notifications.

Parameters for a notifications/tasks/status notification.

JSONRPCNotification of a log message passed from server to client. If no logging/setLevel request has been sent from the client, the server MAY decide which messages to send automatically.

Parameters for a notifications/message notification.

See General fields: \_meta for notes on \_meta usage.

The severity of this log message.

An optional name of the logger issuing this message.

The data to be logged, such as a string message or an object. Any JSON serializable type is allowed here.

An out-of-band notification used to inform the receiver of a progress update for a long-running request.

Parameters for a notifications/progress notification.

See General fields: \_meta for notes on \_meta usage.

The progress token which was given in the initial request, used to associate this notification with the request that is proceeding.

The progress thus far. This should increase every time progress is made, even if the total is unknown.

Total number of items to process (or total progress required), if known.

An optional message describing the current progress.
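A client tracking one progress token might validate and normalize updates like this. `ProgressTracker` is hypothetical, and rejecting non-increasing values is a strict reading of "should increase"; a more lenient client could log such updates instead.

```rust
/// Hypothetical client-side tracker for notifications/progress on one progressToken.
#[derive(Default)]
struct ProgressTracker {
    last: Option<f64>,
}

impl ProgressTracker {
    /// Records an update and returns the completed fraction when `total` is known.
    /// Assumption: a value that does not increase is treated as an error.
    fn update(&mut self, progress: f64, total: Option<f64>) -> Result<Option<f64>, String> {
        if let Some(prev) = self.last {
            if progress <= prev {
                return Err(format!("progress went from {prev} to {progress}"));
            }
        }
        self.last = Some(progress);
        // Only compute a fraction when a positive total was supplied.
        Ok(total.filter(|t| *t > 0.0).map(|t| progress / t))
    }
}

fn main() {
    let mut tracker = ProgressTracker::default();
    println!("{:?}", tracker.update(25.0, Some(100.0))); // prints "Ok(Some(0.25))"
    println!("{:?}", tracker.update(10.0, Some(100.0))); // an Err: progress went backwards
}
```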

An optional notification from the server to the client, informing it that the list of prompts it offers has changed. This may be issued by servers without any previous subscription from the client.

An optional notification from the server to the client, informing it that the list of resources it can read from has changed. This may be issued by servers without any previous subscription from the client.

A notification from the server to the client, informing it that a resource has changed and may need to be read again. This should only be sent if the client previously sent a resources/subscribe request.

Parameters for a notifications/resources/updated notification.

See General fields: \_meta for notes on \_meta usage.

The URI of the resource that has been updated. This might be a sub-resource of the one that the client actually subscribed to.

A notification from the client to the server, informing it that the list of roots has changed. This notification should be sent whenever the client adds, removes, or modifies any root. The server should then request an updated list of roots using the ListRootsRequest.

An optional notification from the server to the client, informing it that the list of tools it offers has changed. This may be issued by servers without any previous subscription from the client.

An optional notification from the server to the client, informing it of the completion of an out-of-band elicitation request.

The ID of the elicitation that completed.

A ping, issued by either the server or the client, to check that the other party is still alive. The receiver must promptly respond, or else may be disconnected.

A response to a task-augmented request.

See General fields: \_meta for notes on \_meta usage.

Metadata for associating messages with a task. Include this in the \_meta field under the key io.modelcontextprotocol/related-task.

The task identifier this message is associated with.

Data associated with a task.

The task identifier.

Current task state.

Optional human-readable message describing the current task state. This can provide context for any status, including:

ISO 8601 timestamp when the task was created.

ISO 8601 timestamp when the task was last updated.

Actual retention duration from creation in milliseconds, null for unlimited.

Suggested polling interval in milliseconds.

Metadata for augmenting a request with task execution. Include this in the task field of the request parameters.

Requested duration in milliseconds to retain task from creation.

The status of a task.
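A requestor typically polls tasks/get until the task reaches a terminal state, honoring the suggested poll interval. In this sketch the status names are assumed to mirror the specification's TaskStatus values, and the `get_task` and `sleep_ms` closures are hypothetical stand-ins for the real round trip and timer, which keeps the loop testable:

```rust
/// Assumed to mirror the spec's TaskStatus values
/// ("working", "input_required", "completed", "failed", "cancelled").
#[derive(Debug, Clone, Copy, PartialEq)]
enum TaskStatus {
    Working,
    InputRequired,
    Completed,
    Failed,
    Cancelled,
}

impl TaskStatus {
    fn is_terminal(self) -> bool {
        matches!(self, TaskStatus::Completed | TaskStatus::Failed | TaskStatus::Cancelled)
    }
}

/// Polls until a terminal state or `max_polls` attempts. `get_task` returns the
/// status plus the server's suggested poll interval in milliseconds, if any.
fn poll_until_done(
    mut get_task: impl FnMut() -> (TaskStatus, Option<u64>),
    mut sleep_ms: impl FnMut(u64),
    default_interval_ms: u64,
    max_polls: usize,
) -> Option<TaskStatus> {
    for _ in 0..max_polls {
        let (status, poll_interval) = get_task();
        if status.is_terminal() {
            return Some(status);
        }
        sleep_ms(poll_interval.unwrap_or(default_interval_ms));
    }
    None // gave up before the task finished
}

fn main() {
    let mut calls = 0;
    let status = poll_until_done(
        || {
            calls += 1;
            if calls < 3 { (TaskStatus::Working, Some(200)) } else { (TaskStatus::Completed, None) }
        },
        |ms| println!("waiting {ms} ms"),
        1000,
        10,
    );
    println!("{status:?}"); // prints "Some(Completed)"
}
```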

A request to retrieve the state of a task.

The task identifier to query.

The response to a tasks/get request.

A request to retrieve the result of a completed task.

The task identifier to retrieve results for.

The response to a tasks/result request. The structure matches the result type of the original request. For example, a tools/call task would return the CallToolResult structure.

See General fields: \_meta for notes on \_meta usage.

A request to retrieve a list of tasks.

The response to a tasks/list request.

See General fields: \_meta for notes on \_meta usage.

An opaque token representing the pagination position after the last returned result. If present, there may be more results available.

A request to cancel a task.

The task identifier to cancel.

The response to a tasks/cancel request.

Used by the client to get a prompt provided by the server.

Parameters for a prompts/get request.

See General fields: \_meta for notes on \_meta usage.

If specified, the caller is requesting out-of-band progress notifications for this request (as represented by notifications/progress). The value of this parameter is an opaque token that will be attached to any subsequent notifications. The receiver is not obligated to provide these notifications.

The name of the prompt or prompt template.

Arguments to use for templating the prompt.

The server's response to a prompts/get request from the client.

See General fields: \_meta for notes on \_meta usage.

An optional description for the prompt.

Describes a message returned as part of a prompt.

This is similar to SamplingMessage, but also supports the embedding of resources from the MCP server.

Sent from the client to request a list of prompts and prompt templates the server has.

The server's response to a prompts/list request from the client.

See General fields: \_meta for notes on \_meta usage.

An opaque token representing the pagination position after the last returned result. If present, there may be more results available.
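Since the cursor is opaque, a client should echo it back unchanged rather than interpret it. The following is a minimal sketch of collecting every page of prompts/list. It assumes a hypothetical `send` transport function, a request parameter named cursor, and result fields named prompts and nextCursor.

```typescript theme={null}
interface Prompt {
  name: string;
  description?: string;
}

// ASSUMPTION: only the fields this loop needs are modeled.
interface ListPromptsResult {
  prompts: Prompt[];
  nextCursor?: string;
}

// Hypothetical transport: sends a JSON-RPC request and resolves with its result.
type Send = (method: string, params: Record<string, unknown>) => Promise<unknown>;

async function listAllPrompts(send: Send): Promise<Prompt[]> {
  const all: Prompt[] = [];
  let cursor: string | undefined;
  do {
    // No cursor on the first call; afterwards pass the opaque token back verbatim.
    const params: Record<string, unknown> = cursor === undefined ? {} : { cursor };
    const page = (await send("prompts/list", params)) as ListPromptsResult;
    all.push(...page.prompts);
    cursor = page.nextCursor;
  } while (cursor !== undefined);
  return all;
}
```

The same loop applies to resources/list, resources/templates/list, and tools/list with the result array name swapped.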

A prompt or prompt template that the server offers.

Optional set of sized icons that the client can display in a user interface.

Clients that support rendering icons MUST support at least the following MIME types:

* image/png - PNG images (safe, universal compatibility)
* image/jpeg (and image/jpg) - JPEG images (safe, universal compatibility)

Clients that support rendering icons SHOULD also support:

* image/svg+xml - SVG images (scalable but requires security precautions)
* image/webp - WebP images (modern, efficient format)

Intended for programmatic or logical use, but used as a display name in past specs or fallback (if title isn't present).

Intended for UI and end-user contexts, optimized to be human-readable and easily understood, even by those unfamiliar with domain-specific terminology.

If not provided, the name should be used for display (except for Tool, where annotations.title should be given precedence over using name, if present).

An optional description of what this prompt provides.

A list of arguments to use for templating the prompt.

See General fields: \_meta for notes on \_meta usage.

Describes an argument that a prompt can accept.

Intended for programmatic or logical use, but used as a display name in past specs or fallback (if title isn't present).

Intended for UI and end-user contexts, optimized to be human-readable and easily understood, even by those unfamiliar with domain-specific terminology.

If not provided, the name should be used for display (except for Tool, where annotations.title should be given precedence over using name, if present).

A human-readable description of the argument.

Whether this argument must be provided.

Sent from the client to request a list of resources the server has.

The server's response to a resources/list request from the client.

See General fields: \_meta for notes on \_meta usage.

An opaque token representing the pagination position after the last returned result. If present, there may be more results available.

A known resource that the server is capable of reading.

Optional set of sized icons that the client can display in a user interface.

Clients that support rendering icons MUST support at least the following MIME types:

* image/png - PNG images (safe, universal compatibility)
* image/jpeg (and image/jpg) - JPEG images (safe, universal compatibility)

Clients that support rendering icons SHOULD also support:

* image/svg+xml - SVG images (scalable but requires security precautions)
* image/webp - WebP images (modern, efficient format)

Intended for programmatic or logical use, but used as a display name in past specs or fallback (if title isn't present).

Intended for UI and end-user contexts, optimized to be human-readable and easily understood, even by those unfamiliar with domain-specific terminology.

If not provided, the name should be used for display (except for Tool, where annotations.title should be given precedence over using name, if present).

The URI of this resource.

A description of what this resource represents.

This can be used by clients to improve the LLM's understanding of available resources. It can be thought of like a "hint" to the model.

The MIME type of this resource, if known.

Optional annotations for the client.

The size of the raw resource content, in bytes (i.e., before base64 encoding or any tokenization), if known.

This can be used by Hosts to display file sizes and estimate context window usage.

See General fields: \_meta for notes on \_meta usage.

Sent from the client to the server, to read a specific resource URI.

Parameters for a resources/read request.

See General fields: \_meta for notes on \_meta usage.

If specified, the caller is requesting out-of-band progress notifications for this request (as represented by notifications/progress). The value of this parameter is an opaque token that will be attached to any subsequent notifications. The receiver is not obligated to provide these notifications.

The URI of the resource. The URI can use any protocol; it is up to the server how to interpret it.

The server's response to a resources/read request from the client.

See General fields: \_meta for notes on \_meta usage.

Sent from the client to request resources/updated notifications from the server whenever a particular resource changes.

Parameters for a resources/subscribe request.

See General fields: \_meta for notes on \_meta usage.

If specified, the caller is requesting out-of-band progress notifications for this request (as represented by notifications/progress). The value of this parameter is an opaque token that will be attached to any subsequent notifications. The receiver is not obligated to provide these notifications.

The URI of the resource. The URI can use any protocol; it is up to the server how to interpret it.

Sent from the client to request a list of resource templates the server has.

The server's response to a resources/templates/list request from the client.

See General fields: \_meta for notes on \_meta usage.

An opaque token representing the pagination position after the last returned result. If present, there may be more results available.

A template description for resources available on the server.

Optional set of sized icons that the client can display in a user interface.

Clients that support rendering icons MUST support at least the following MIME types:

* image/png - PNG images (safe, universal compatibility)
* image/jpeg (and image/jpg) - JPEG images (safe, universal compatibility)

Clients that support rendering icons SHOULD also support:

* image/svg+xml - SVG images (scalable but requires security precautions)
* image/webp - WebP images (modern, efficient format)

Intended for programmatic or logical use, but used as a display name in past specs or fallback (if title isn't present).

Intended for UI and end-user contexts, optimized to be human-readable and easily understood, even by those unfamiliar with domain-specific terminology.

If not provided, the name should be used for display (except for Tool, where annotations.title should be given precedence over using name, if present).

A URI template (according to RFC 6570) that can be used to construct resource URIs.

A description of what this template is for.

This can be used by clients to improve the LLM's understanding of available resources. It can be thought of like a "hint" to the model.

The MIME type for all resources that match this template. This should only be included if all resources matching this template have the same type.

Optional annotations for the client.

See General fields: \_meta for notes on \_meta usage.
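RFC 6570 defines four levels of template expressions. The following is a rough sketch of only the simplest one, Level 1 simple string expansion. It replaces each {name} with the variable's value and percent-encodes everything except the RFC 3986 unreserved characters. Operators such as {+path} or {?q} are deliberately not handled. The helper names and the file:///logs/{date} template are hypothetical.

```typescript theme={null}
// Percent-encode everything except RFC 3986 unreserved characters
// (ALPHA / DIGIT / "-" / "." / "_" / "~"). encodeURIComponent leaves
// !'()* unencoded, so those are encoded explicitly.
function encodeUnreserved(value: string): string {
  return encodeURIComponent(value).replace(
    /[!'()*]/g,
    (c) => "%" + c.charCodeAt(0).toString(16).toUpperCase(),
  );
}

// Level 1 only: expands {var}; expressions with operators ({+var}, {?var}, ...)
// do not match the pattern and are left untouched.
function expandLevel1(template: string, vars: Record<string, string | undefined>): string {
  return template.replace(/\{([A-Za-z0-9_]+)\}/g, (_match: string, name: string) => {
    const value = vars[name];
    // RFC 6570: an undefined variable expands to the empty string.
    return value === undefined ? "" : encodeUnreserved(value);
  });
}

console.log(expandLevel1("file:///logs/{date}", { date: "2025-01-31" }));
// file:///logs/2025-01-31
```

Under Level 1, a slash inside a value is encoded as %2F. RFC 6570's reserved expansion ({+path}) is the form that keeps such characters intact.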

Sent from the client to request cancellation of resources/updated notifications from the server. This should follow a previous resources/subscribe request.

Parameters for a resources/unsubscribe request.

See General fields: \_meta for notes on \_meta usage.

If specified, the caller is requesting out-of-band progress notifications for this request (as represented by notifications/progress). The value of this parameter is an opaque token that will be attached to any subsequent notifications. The receiver is not obligated to provide these notifications.

The URI of the resource. The URI can use any protocol; it is up to the server how to interpret it.

Sent from the server to request a list of root URIs from the client. Roots allow servers to ask for specific directories or files to operate on. A common example for roots is providing a set of repositories or directories a server should operate on.

This request is typically used when the server needs to understand the file system structure or access specific locations that the client has permission to read from.

The client's response to a roots/list request from the server. This result contains an array of Root objects, each representing a root directory or file that the server can operate on.

See General fields: \_meta for notes on \_meta usage.

Represents a root directory or file that the server can operate on.

The URI identifying the root. This must start with file:// for now. This restriction may be relaxed in future versions of the protocol to allow other URI schemes.

An optional name for the root. This can be used to provide a human-readable identifier for the root, which may be useful for display purposes or for referencing the root in other parts of the application.

See General fields: \_meta for notes on \_meta usage.
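Since a root URI must currently start with file://, a server might filter what it receives from roots/list before acting on it. This is a minimal sketch: the helper names are hypothetical. Falling back to the URI when name is absent is a display choice made for this example, not a protocol rule.

```typescript theme={null}
interface Root {
  uri: string;
  name?: string;
}

// Keep only roots whose URI uses the file:// scheme, as currently required.
function validRoots(roots: Root[]): Root[] {
  return roots.filter((root) => root.uri.startsWith("file://"));
}

// Hypothetical display helper: the optional name, else the URI itself.
function rootLabel(root: Root): string {
  return root.name ?? root.uri;
}
```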

A request from the server to sample an LLM via the client. The client has full discretion over which model to select. The client should also inform the user before beginning sampling, to allow them to inspect the request (human in the loop) and decide whether to approve it.

Parameters for a sampling/createMessage request.

If specified, the caller is requesting task-augmented execution for this request. The request will return a CreateTaskResult immediately, and the actual result can be retrieved later via tasks/result.

Task augmentation is subject to capability negotiation: receivers MUST declare support for task augmentation of specific request types in their capabilities.

See General fields: \_meta for notes on \_meta usage.

If specified, the caller is requesting out-of-band progress notifications for this request (as represented by notifications/progress). The value of this parameter is an opaque token that will be attached to any subsequent notifications. The receiver is not obligated to provide these notifications.

The server's preferences for which model to select. The client MAY ignore these preferences.

An optional system prompt the server wants to use for sampling. The client MAY modify or omit this prompt.

A request to include context from one or more MCP servers (including the caller), to be attached to the prompt. The client MAY ignore this request.

Default is "none". Values "thisServer" and "allServers" are soft-deprecated. Servers SHOULD only use these values if the client declares ClientCapabilities.sampling.context. These values may be removed in future spec releases.

The requested maximum number of tokens to sample (to prevent runaway completions).

The client MAY choose to sample fewer tokens than the requested maximum.

Optional metadata to pass through to the LLM provider. The format of this metadata is provider-specific.

Tools that the model may use during generation. The client MUST return an error if this field is provided but ClientCapabilities.sampling.tools is not declared.

Controls how the model uses tools. The client MUST return an error if this field is provided but ClientCapabilities.sampling.tools is not declared. Default is \{ mode: "auto" }.
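A server can apply the capability rules for sampling before it sends a request. The sketch below uses hypothetical helper and type names and assumes the field names messages, maxTokens, includeContext, tools, and toolChoice. It drops tools and toolChoice when the client has not declared ClientCapabilities.sampling.tools. It also falls back to "none" for includeContext when sampling.context is missing. Dropping tools silently is only one option; a server could equally decide not to send the request at all.

```typescript theme={null}
// ASSUMPTION: only the capability flags discussed above are modeled.
interface ClientCapabilities {
  sampling?: { context?: object; tools?: object };
}

// ASSUMPTION: field names for sampling/createMessage params; shapes simplified.
interface CreateMessageParams {
  messages: unknown[]; // message shape omitted in this sketch
  maxTokens: number;
  systemPrompt?: string;
  includeContext?: "none" | "thisServer" | "allServers";
  tools?: { name: string }[];
  toolChoice?: { mode: string };
}

// Returns a copy of params adjusted to what the client has declared.
function fitToClient(params: CreateMessageParams, caps: ClientCapabilities): CreateMessageParams {
  const out: CreateMessageParams = { ...params };
  if (!caps.sampling?.tools) {
    // Sending tools or toolChoice without sampling.tools would make the client error.
    delete out.tools;
    delete out.toolChoice;
  }
  if (!caps.sampling?.context && out.includeContext !== undefined && out.includeContext !== "none") {
    // "thisServer"/"allServers" should only be used when sampling.context is declared.
    out.includeContext = "none";
  }
  return out;
}
```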

The client's response to a sampling/createMessage request from the server. The client should inform the user before returning the sampled message, to allow them to inspect the response (human in the loop) and decide whether to allow the server to see it.

See General fields: \_meta for notes on \_meta usage.

The name of the model that generated the message.

The reason why sampling stopped, if known.

Standard values:

This field is an open string to allow for provider-specific stop reasons.

Hints to use for model selection.

Keys not declared here are currently left unspecified by the spec and are up to the client to interpret.

A hint for a model name.

The client SHOULD treat this as a substring of a model name; for example:

* claude-3-5-sonnet should match claude-3-5-sonnet-20241022
* sonnet should match claude-3-5-sonnet-20241022, claude-3-sonnet-20240229, etc.
* claude should match any Claude model

The client MAY also map the string to a different provider's model name or a different model family, as long as it fills a similar niche; for example:

* gemini-1.5-flash could match claude-3-haiku-20240307

The server's preferences for model selection, requested of the client during sampling.

Because LLMs can vary along multiple dimensions, choosing the "best" model is rarely straightforward. Different models excel in different areas: some are faster but less capable, others are more capable but more expensive, and so on. This interface allows servers to express their priorities across multiple dimensions to help clients make an appropriate selection for their use case.

These preferences are always advisory. The client MAY ignore them. It is also up to the client to decide how to interpret these preferences and how to balance them against other considerations.

Optional hints to use for model selection.

If multiple hints are specified, the client MUST evaluate them in order (such that the first match is taken).

The client SHOULD prioritize these hints over the numeric priorities, but MAY still use the priorities to select from ambiguous matches.

How much to prioritize cost when selecting a model. A value of 0 means cost is not important, while a value of 1 means cost is the most important factor.

How much to prioritize sampling speed (latency) when selecting a model. A value of 0 means speed is not important, while a value of 1 means speed is the most important factor.

How much to prioritize intelligence and capabilities when selecting a model. A value of 0 means intelligence is not important, while a value of 1 means intelligence is the most important factor.
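One way a client could honor these rules is to walk the hints in order and take the first hint that is a substring of some available model name. When that hint matches several models, the priorities break the tie. When no hint matches, a weighted score over the three priorities decides among all models. The sketch assumes the field names hints, costPriority, speedPriority, and intelligencePriority. The per-model ratings are hypothetical client-side data, and the scoring formula is just one possible interpretation. Cross-provider mapping (the MAY case above) is left out.

```typescript theme={null}
// ASSUMPTION: field names for hints and priorities.
interface ModelHint {
  name?: string;
}
interface ModelPreferences {
  hints?: ModelHint[];
  costPriority?: number;
  speedPriority?: number;
  intelligencePriority?: number;
}

// Hypothetical client-side catalog: ratings in [0, 1], higher is better
// (a high "cheapness" means the model is inexpensive).
interface ModelInfo {
  name: string;
  cheapness: number;
  speed: number;
  intelligence: number;
}

function score(m: ModelInfo, p: ModelPreferences): number {
  return (
    (p.costPriority ?? 0) * m.cheapness +
    (p.speedPriority ?? 0) * m.speed +
    (p.intelligencePriority ?? 0) * m.intelligence
  );
}

// Highest-scoring candidate; ties keep the earlier entry.
function best(candidates: ModelInfo[], p: ModelPreferences): ModelInfo | undefined {
  let top: ModelInfo | undefined;
  for (const m of candidates) {
    if (top === undefined || score(m, p) > score(top, p)) top = m;
  }
  return top;
}

function selectModel(models: ModelInfo[], prefs: ModelPreferences): ModelInfo | undefined {
  // Hints are evaluated in order; the first hint that matches anything wins.
  for (const hint of prefs.hints ?? []) {
    const needle = hint.name;
    if (!needle) continue;
    const matches = models.filter((m) => m.name.includes(needle));
    if (matches.length > 0) return best(matches, prefs); // priorities resolve ambiguity
  }
  // No hint matched: fall back to the numeric priorities alone.
  return best(models, prefs);
}
```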

Describes a message issued to or received from an LLM API.

See General fields: \_meta for notes on \_meta usage.

Controls tool selection behavior for sampling requests.

Controls the tool use ability of the model:

The result of a tool use, provided by the user back to the assistant.

The ID of the tool use this result corresponds to.

This MUST match the ID from a previous ToolUseContent.

The unstructured result content of the tool use.

This has the same format as CallToolResult.content and can include text, images, audio, resource links, and embedded resources.

An optional structured result object.

If the tool defined an outputSchema, this SHOULD conform to that schema.

Whether the tool use resulted in an error.

If true, the content typically describes the error that occurred.

Default: false

Optional metadata about the tool result. Clients SHOULD preserve this field when including tool results in subsequent sampling requests to enable caching optimizations.

See General fields: \_meta for notes on \_meta usage.

A request from the assistant to call a tool.

A unique identifier for this tool use.

This ID is used to match tool results to their corresponding tool uses.

The name of the tool to call.

The arguments to pass to the tool, conforming to the tool's input schema.

Optional metadata about the tool use. Clients SHOULD preserve this field when including tool uses in subsequent sampling requests to enable caching optimizations.

See General fields: \_meta for notes on \_meta usage.
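Each tool result must reference the ID of an earlier tool use, so a client assembling the next sampling request might check that pairing first. This is a minimal sketch with all other fields omitted. It assumes the discriminator values tool_use and tool_result and the field name toolUseId, none of which appear in this section. The helper name is hypothetical.

```typescript theme={null}
// ASSUMPTION: discriminator values and toolUseId field name; other fields omitted.
interface ToolUseContent {
  type: "tool_use";
  id: string;
  name: string;
}
interface ToolResultContent {
  type: "tool_result";
  toolUseId: string;
  isError?: boolean;
}
type Block = ToolUseContent | ToolResultContent;

// Returns a list of problems; an empty list means every result follows a matching tool use.
function checkToolPairing(blocks: Block[]): string[] {
  const seen = new Set<string>();
  const problems: string[] = [];
  for (const b of blocks) {
    if (b.type === "tool_use") {
      seen.add(b.id);
    } else if (!seen.has(b.toolUseId)) {
      problems.push(`result references unknown tool use ${b.toolUseId}`);
    }
  }
  return problems;
}
```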

Used by the client to invoke a tool provided by the server.

Parameters for a tools/call request.

If specified, the caller is requesting task-augmented execution for this request. The request will return a CreateTaskResult immediately, and the actual result can be retrieved later via tasks/result.

Task augmentation is subject to capability negotiation: receivers MUST declare support for task augmentation of specific request types in their capabilities.

See General fields: \_meta for notes on \_meta usage.

If specified, the caller is requesting out-of-band progress notifications for this request (as represented by notifications/progress). The value of this parameter is an opaque token that will be attached to any subsequent notifications. The receiver is not obligated to provide these notifications.

The name of the tool.

Arguments to use for the tool call.

The server's response to a tool call.

See General fields: \_meta for notes on \_meta usage.

A list of content objects that represent the unstructured result of the tool call.

An optional JSON object that represents the structured result of the tool call.

Whether the tool call ended in an error.

If not set, this is assumed to be false (the call was successful).

Any errors that originate from the tool SHOULD be reported inside the result object, with isError set to true, not as an MCP protocol-level error response. Otherwise, the LLM would not be able to see that an error occurred and self-correct.

However, any errors in finding the tool, an error indicating that the server does not support tool calls, or any other exceptional conditions should be reported as an MCP error response.
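This split can be sketched as a server-side dispatcher. An unknown tool name becomes a JSON-RPC error response. An exception thrown by the tool itself becomes an ordinary result with isError: true, so the model can read it. The registry and the divide tool are hypothetical. Using -32602 (JSON-RPC "Invalid params") for an unknown tool is an assumption of this example.

```typescript theme={null}
interface TextContent {
  type: "text";
  text: string;
}
interface CallToolResult {
  content: TextContent[];
  isError?: boolean;
}
interface JsonRpcError {
  code: number;
  message: string;
}
type Outcome = { result: CallToolResult } | { error: JsonRpcError };

type ToolHandler = (args: Record<string, unknown>) => CallToolResult;

// Hypothetical registry with a single example tool.
const tools: Record<string, ToolHandler> = {
  divide: (args) => {
    const a = Number(args.a);
    const b = Number(args.b);
    if (b === 0) throw new Error("Division by zero");
    return { content: [{ type: "text", text: String(a / b) }] };
  },
};

function handleToolsCall(name: string, args: Record<string, unknown>): Outcome {
  const tool = tools[name];
  if (tool === undefined) {
    // Failing to find the tool is a protocol-level problem.
    // ASSUMPTION: -32602 as the error code.
    return { error: { code: -32602, message: `Unknown tool: ${name}` } };
  }
  try {
    return { result: tool(args) };
  } catch (err) {
    // Errors raised by the tool go back inside the result so the LLM can see them.
    const message = err instanceof Error ? err.message : String(err);
    return { result: { content: [{ type: "text", text: message }], isError: true } };
  }
}
```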

Sent from the client to request a list of tools the server has.

The server's response to a tools/list request from the client.

See General fields: \_meta for notes on \_meta usage.

An opaque token representing the pagination position after the last returned result. If present, there may be more results available.

Definition for a tool the client can call.

Optional set of sized icons that the client can display in a user interface.

Clients that support rendering icons MUST support at least the following MIME types:

* image/png - PNG images (safe, universal compatibility)
* image/jpeg (and image/jpg) - JPEG images (safe, universal compatibility)

Clients that support rendering icons SHOULD also support:

* image/svg+xml - SVG images (scalable but requires security precautions)
* image/webp - WebP images (modern, efficient format)

Intended for programmatic or logical use, but used as a display name in past specs or fallback (if title isn't present).

Intended for UI and end-user contexts, optimized to be human-readable and easily understood, even by those unfamiliar with domain-specific terminology.

If not provided, the name should be used for display (except for Tool, where annotations.title should be given precedence over using name, if present).

A human-readable description of the tool.

This can be used by clients to improve the LLM's understanding of available tools. It can be thought of like a "hint" to the model.

A JSON Schema object defining the expected parameters for the tool.

Execution-related properties for this tool.

An optional JSON Schema object defining the structure of the tool's output returned in the structuredContent field of a CallToolResult.

Defaults to JSON Schema 2020-12 when no explicit \$schema is provided. Currently restricted to type: "object" at the root level.

Optional additional tool information.

Display name precedence order is: title, annotations.title, then name.

See General fields: \_meta for notes on \_meta usage.
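The display-name rules above can be captured in two small helpers (hypothetical names). The sketch uses ?? so that only a missing value, not an empty string, falls through to the next candidate. The text does not say how empty titles should be treated.

```typescript theme={null}
interface ToolAnnotations {
  title?: string;
}
interface Tool {
  name: string;
  title?: string;
  annotations?: ToolAnnotations;
}

// For Tool: title, then annotations.title, then name.
function toolDisplayName(tool: Tool): string {
  return tool.title ?? tool.annotations?.title ?? tool.name;
}

// For other named objects (prompts, prompt arguments, resources, templates): title, then name.
function displayName(item: { name: string; title?: string }): string {
  return item.title ?? item.name;
}
```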

Additional properties describing a Tool to clients.

NOTE: all properties in ToolAnnotations are hints. They are not guaranteed to provide a faithful description of tool behavior (including descriptive properties like title).

Clients should never make tool use decisions based on ToolAnnotations received from untrusted servers.

A human-readable title for the tool.

If true, the tool does not modify its environment.

Default: false

If true, the tool may perform destructive updates to its environment. If false, the tool performs only additive updates.

(This property is meaningful only when readOnlyHint == false)

Default: true

If true, calling the tool repeatedly with the same arguments will have no additional effect on its environment.

(This property is meaningful only when readOnlyHint == false)

Default: false

If true, this tool may interact with an "open world" of external entities. If false, the tool's domain of interaction is closed. For example, the world of a web search tool is open, whereas that of a memory tool is not.

Default: true
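Every annotation is optional, so a client usually needs the effective value with the documented defaults filled in. This is a minimal sketch that assumes the property names destructiveHint, idempotentHint, and openWorldHint alongside the readOnlyHint named above. The EffectiveHints shape and helper name are hypothetical. As the note above says, these values remain hints and should not drive tool-use decisions for untrusted servers.

```typescript theme={null}
// ASSUMPTION: property names other than readOnlyHint.
interface ToolAnnotations {
  title?: string;
  readOnlyHint?: boolean;
  destructiveHint?: boolean;
  idempotentHint?: boolean;
  openWorldHint?: boolean;
}

// Hypothetical resolved view of the hints.
interface EffectiveHints {
  readOnly: boolean;
  // undefined when readOnly is true, since these hints are then not meaningful
  destructive: boolean | undefined;
  idempotent: boolean | undefined;
  openWorld: boolean;
}

function effectiveHints(a: ToolAnnotations = {}): EffectiveHints {
  const readOnly = a.readOnlyHint ?? false; // default: false
  return {
    readOnly,
    destructive: readOnly ? undefined : (a.destructiveHint ?? true), // default: true
    idempotent: readOnly ? undefined : (a.idempotentHint ?? false), // default: false
    openWorld: a.openWorldHint ?? true, // default: true
  };
}
```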

Execution-related properties for a tool.

Indicates whether this tool supports task-augmented execution. This allows clients to handle long-running operations through polling the task system.

Default: "forbidden"

-

+```json theme={null}

+{

+ "type": "text",

+ "text": "The text content of the message"

+}

+```

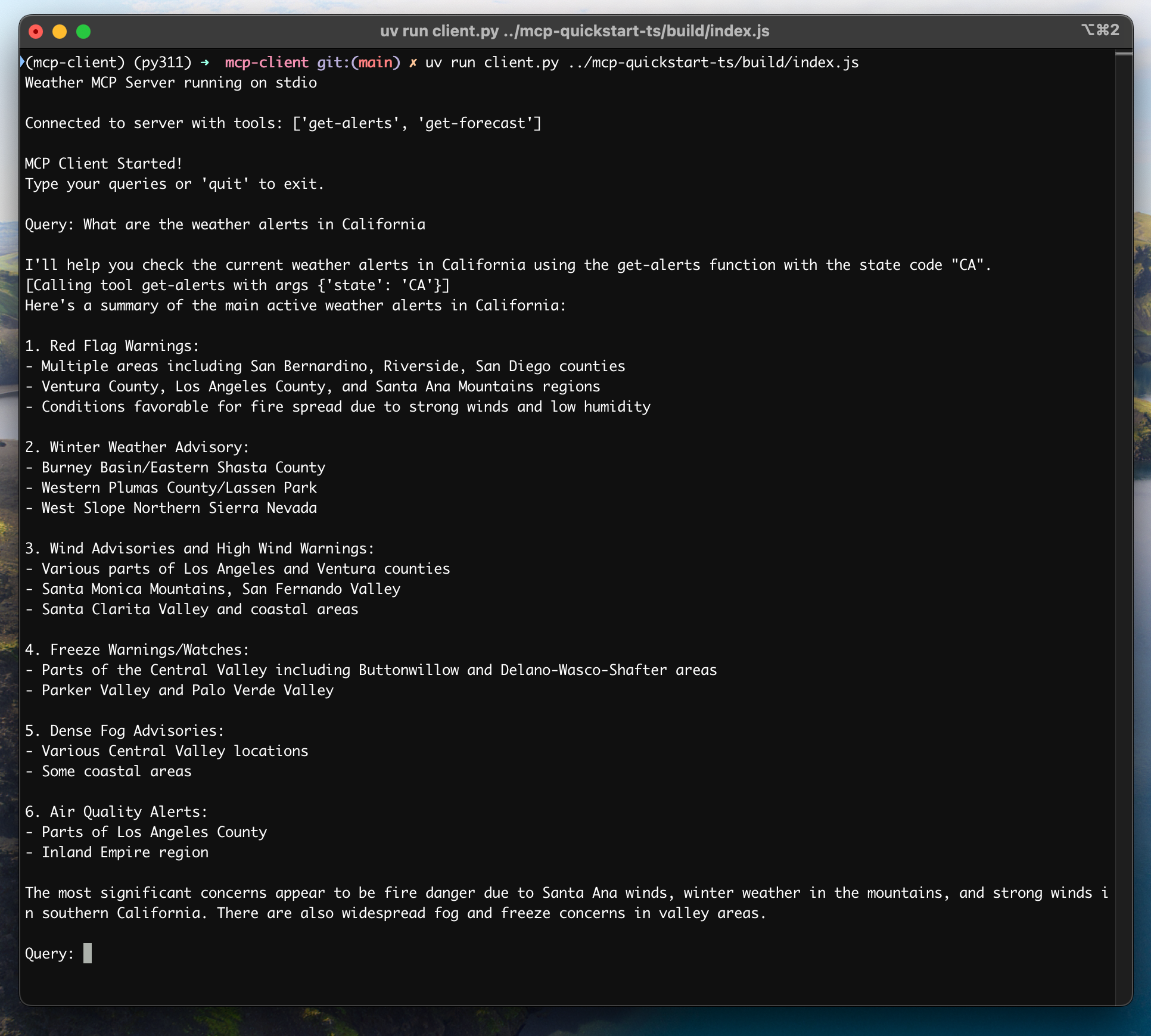

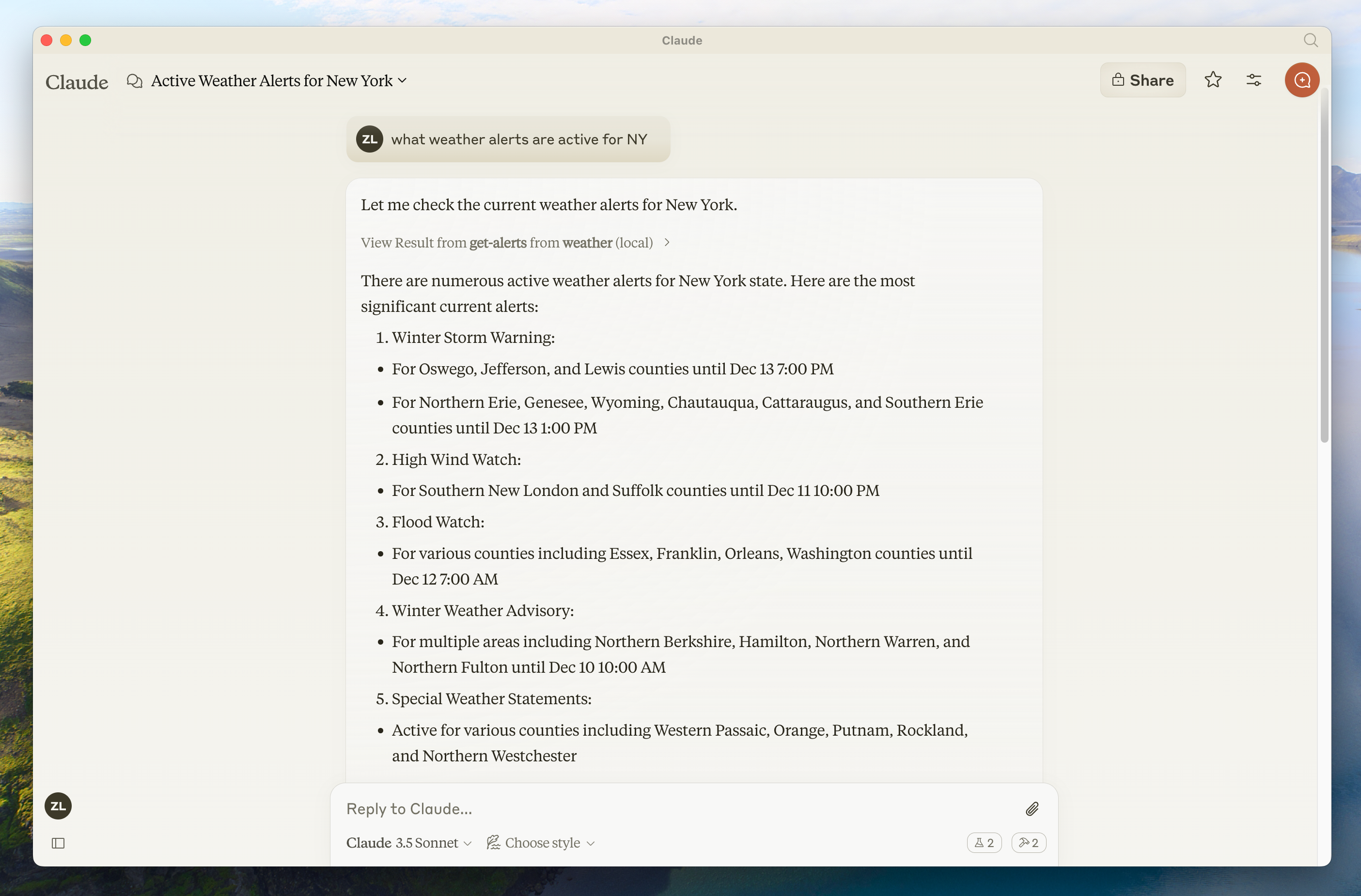

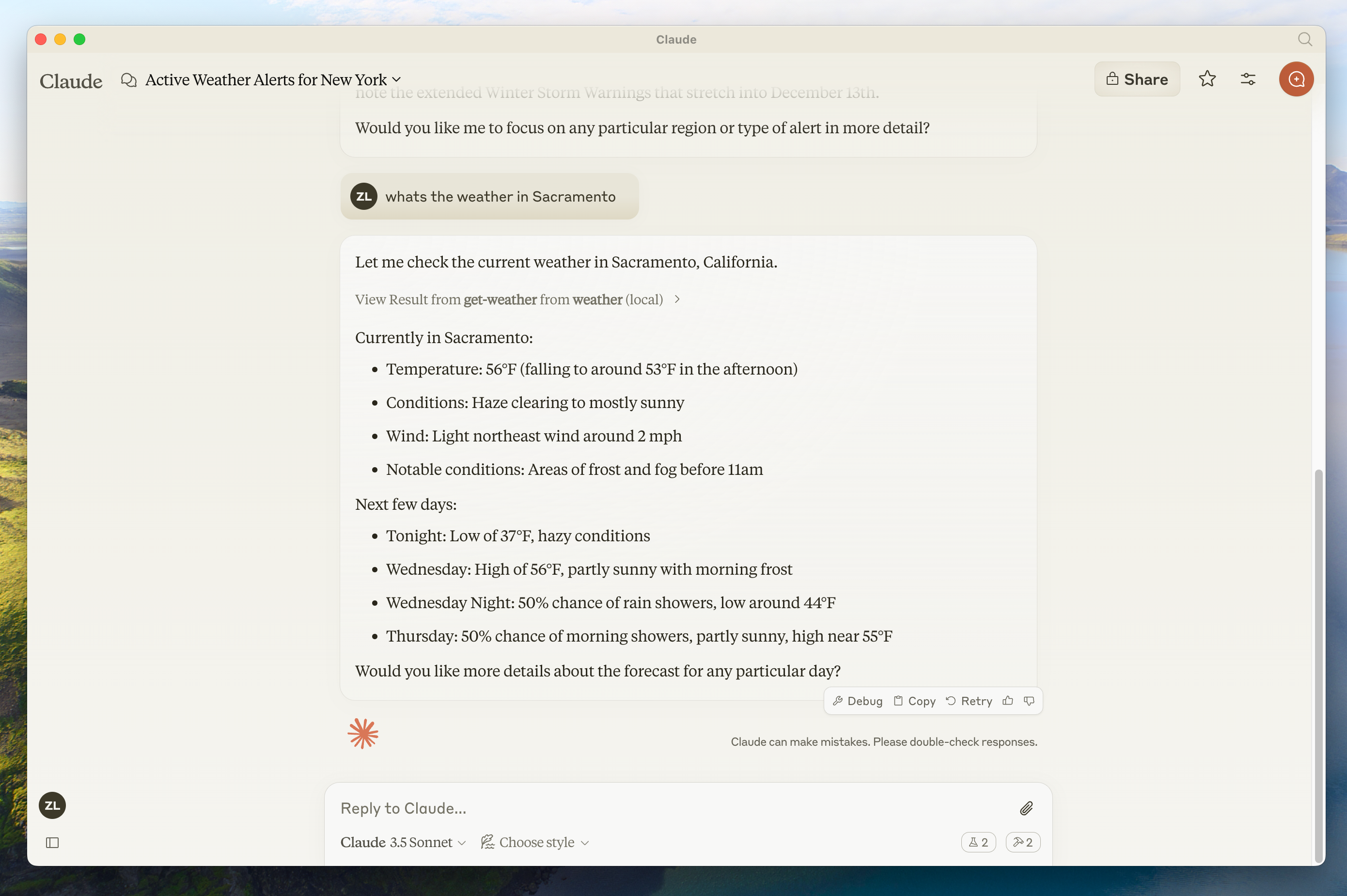

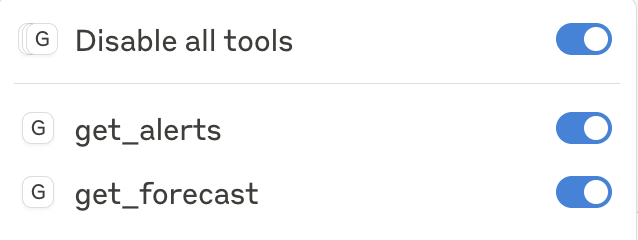

-After clicking on the hammer icon, you should see two tools listed:

+This is the most common content type used for natural language interactions.


+#### Image Content

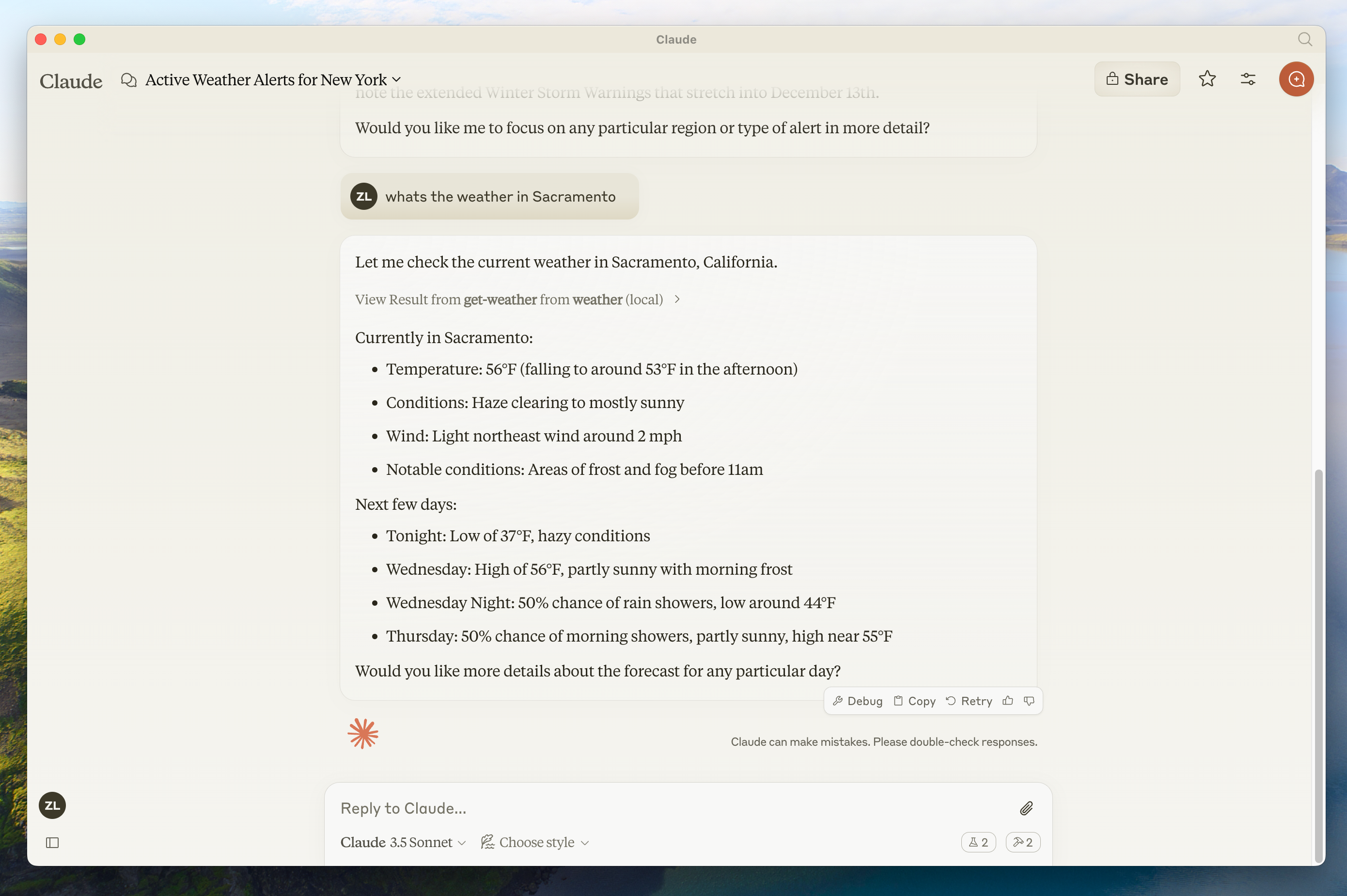

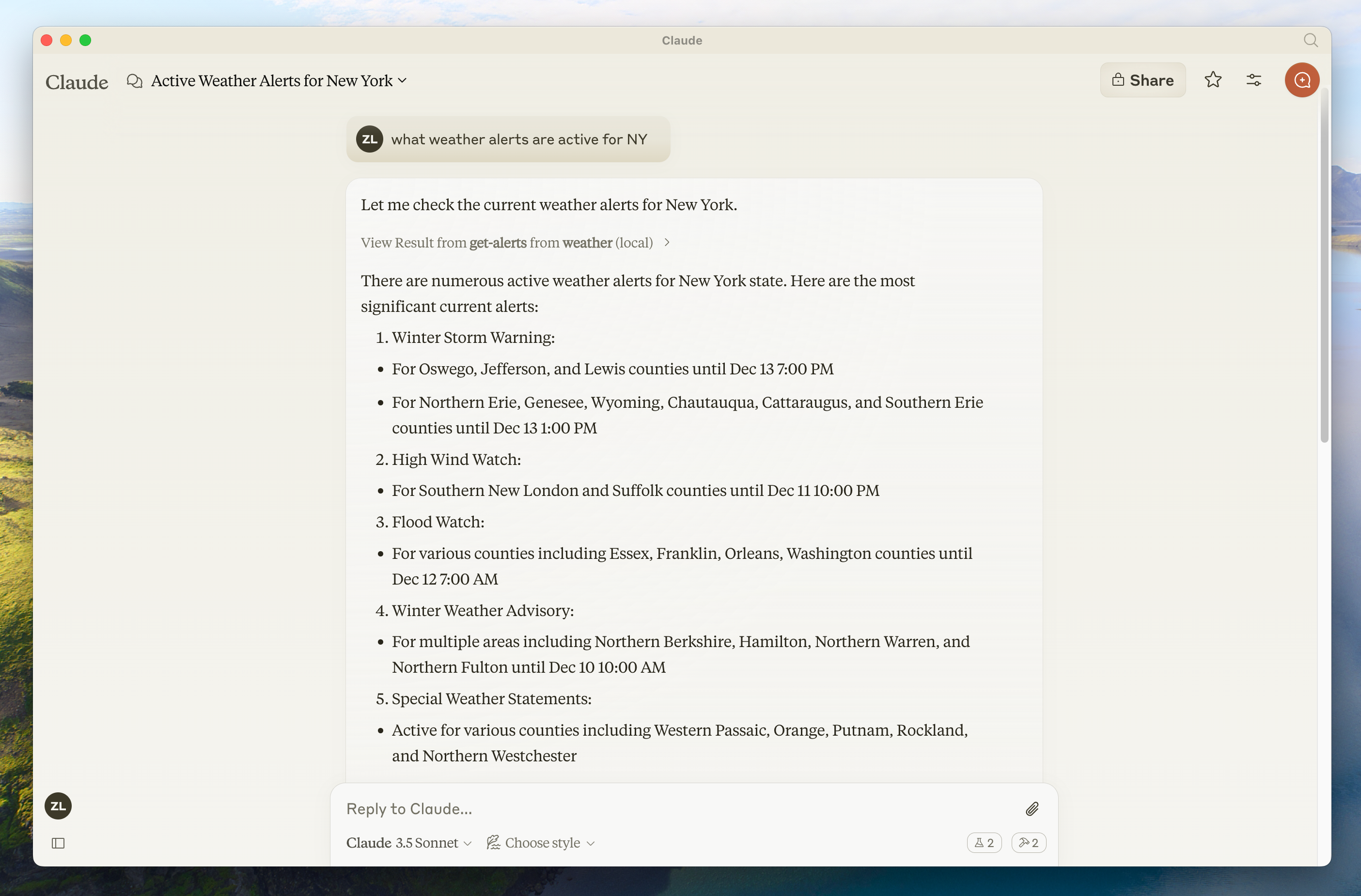

-If your server isn't being picked up by Claude for Desktop, proceed to the [Troubleshooting](#troubleshooting) section for debugging tips.

+Image content allows including visual information in messages:

-If the hammer icon has shown up, you can now test your server by running the following commands in Claude for Desktop:

+```json theme={null}

+{

+ "type": "image",

+ "data": "base64-encoded-image-data",

+ "mimeType": "image/png"

+}

+```

-* What's the weather in Sacramento?

-* What are the active weather alerts in Texas?

+The image data **MUST** be base64-encoded and include a valid MIME type. This enables

+multi-modal interactions where visual context is important.


+#### Audio Content


+Audio content allows including audio information in messages:


+## Protocol Messages

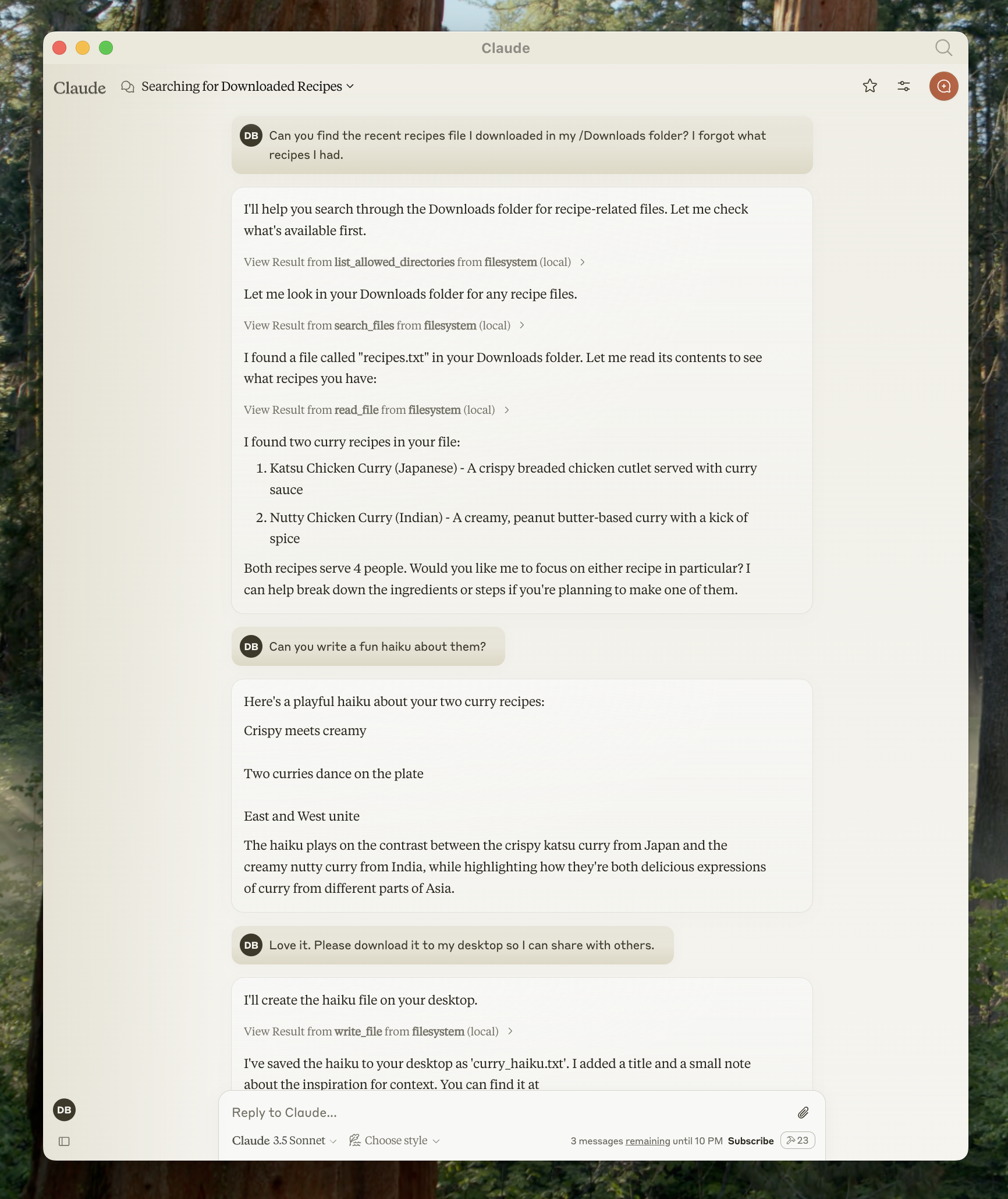

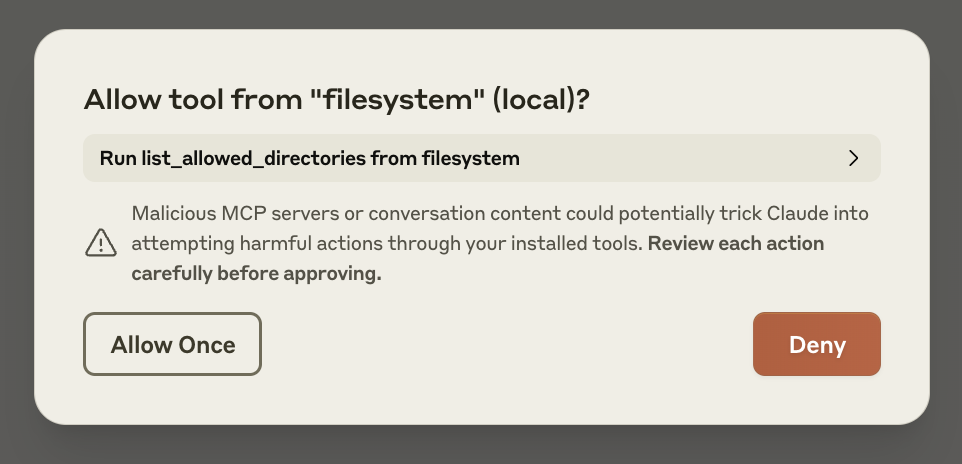

-Don't worry — it will ask you for your permission before executing these actions!

+### Listing Resources

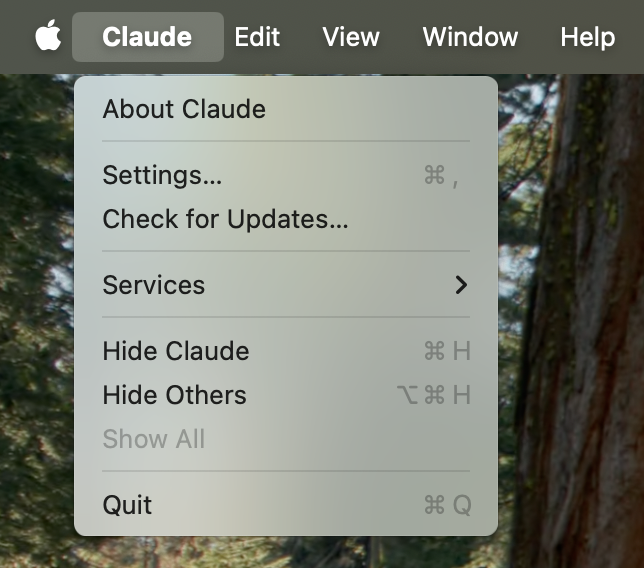

-## 1. Download Claude for Desktop

+To discover available resources, clients send a `resources/list` request. This operation

+supports [pagination](/specification/2025-11-25/server/utilities/pagination).

-Start by downloading [Claude for Desktop](https://claude.ai/download), choosing either macOS or Windows. (Linux is not yet supported for Claude for Desktop.)

+**Request:**

-Follow the installation instructions.

+```json theme={null}

+{

+ "jsonrpc": "2.0",

+ "id": 1,

+ "method": "resources/list",

+ "params": {

+ "cursor": "optional-cursor-value"

+ }

+}

+```

-If you already have Claude for Desktop, make sure it's on the latest version by clicking on the Claude menu on your computer and selecting "Check for Updates..."

+**Response:**


+**Response:**

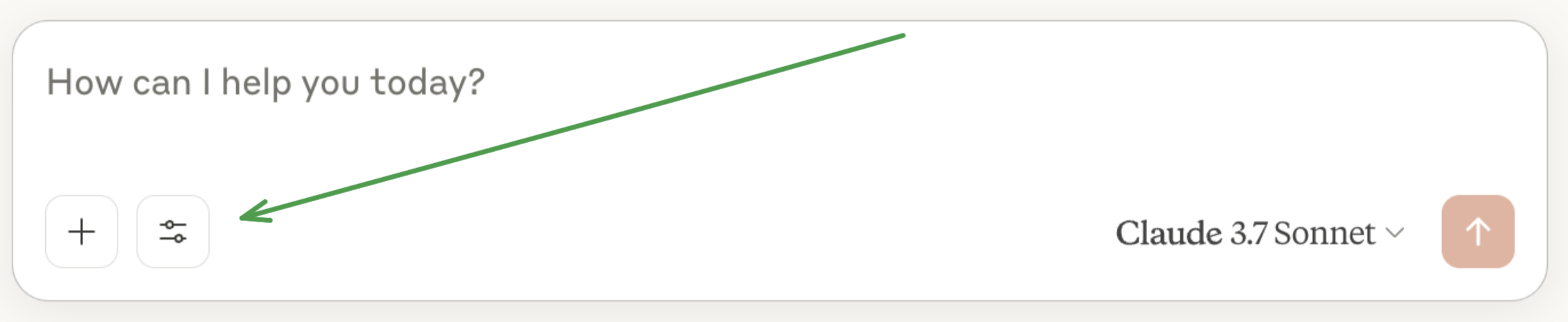

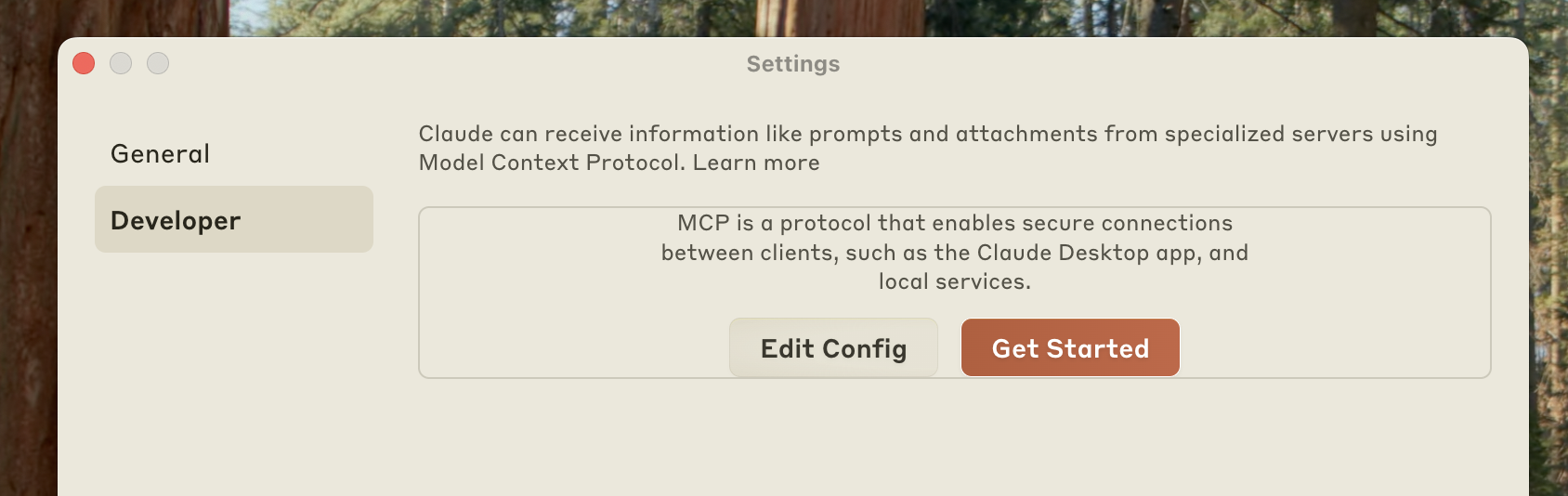

-Click on "Developer" in the left-hand bar of the Settings pane, and then click on "Edit Config":

+```json theme={null}

+{

+ "jsonrpc": "2.0",

+ "id": 2,

+ "result": {

+ "contents": [

+ {

+ "uri": "file:///project/src/main.rs",

+ "mimeType": "text/x-rust",

+ "text": "fn main() {\n println!(\"Hello world!\");\n}"

+ }

+ ]

+ }

+}

+```


+### Resource Templates

-This will create a configuration file at:

+Resource templates allow servers to expose parameterized resources using

+[URI templates](https://datatracker.ietf.org/doc/html/rfc6570). Arguments may be

+auto-completed through [the completion API](/specification/2025-11-25/server/utilities/completion).

-* macOS: `~/Library/Application Support/Claude/claude_desktop_config.json`

-* Windows: `%APPDATA%\Claude\claude_desktop_config.json`

+**Request:**

-if you don't already have one, and will display the file in your file system.

+```json theme={null}

+{

+ "jsonrpc": "2.0",

+ "id": 3,

+ "method": "resources/templates/list"

+}

+```

-Open up the configuration file in any text editor. Replace the file contents with this:

+**Response:**


+ Note over Client,Server: Resource Access

+ Client->>Server: resources/read

+ Server-->>Client: Resource contents

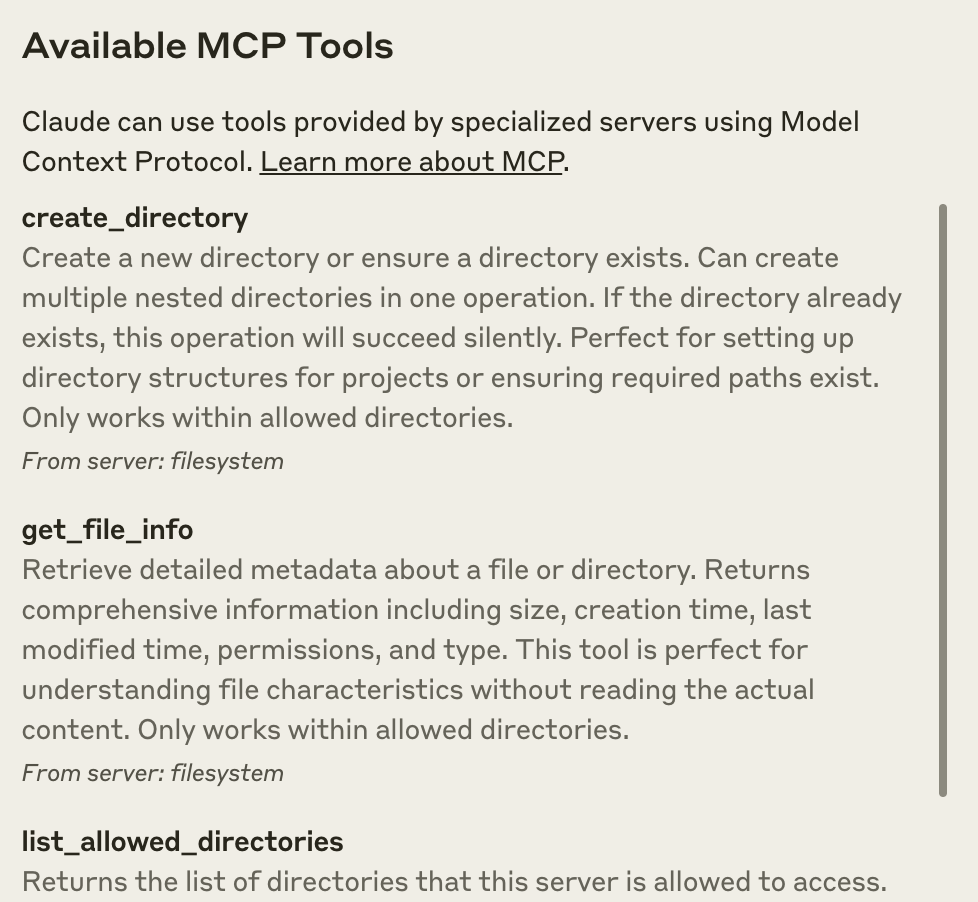

-After clicking on the hammer icon, you should see the tools that come with the Filesystem MCP Server:

+ Note over Client,Server: Subscriptions

+ Client->>Server: resources/subscribe

+ Server-->>Client: Subscription confirmed


+ Note over Client,Server: Updates

+ Server--)Client: notifications/resources/updated

+ Client->>Server: resources/read

+ Server-->>Client: Updated contents

+```

-If your server isn't being picked up by Claude for Desktop, proceed to the [Troubleshooting](#troubleshooting) section for debugging tips.

+## Data Types

-## 4. Try it out!

+### Resource

-You can now talk to Claude and ask it about your filesystem. It should know when to call the relevant tools.

+A resource definition includes:

-Things you might try asking Claude:

+* `uri`: Unique identifier for the resource

+* `name`: The name of the resource.

+* `title`: Optional human-readable name of the resource for display purposes.

+* `description`: Optional description

+* `icons`: Optional array of icons for display in user interfaces

+* `mimeType`: Optional MIME type

+* `size`: Optional size in bytes

-* Can you write a poem and save it to my desktop?

-* What are some work-related files in my downloads folder?

-* Can you take all the images on my desktop and move them to a new folder called "Images"?

+### Resource Contents

-As needed, Claude will call the relevant tools and seek your approval before taking an action:

+Resources can contain either text or binary data:


+#### Text Content

-## Troubleshooting

+```json theme={null}

+{

+ "uri": "file:///example.txt",

+ "mimeType": "text/plain",

+ "text": "Resource content"

+}

+```


-Creates WebFlux-based SSE server transport.

Requires the mcp-spring-webflux dependency.

Implements the MCP HTTP with SSE transport specification, providing:

+**Response:**
-Creates WebMvc-based SSE server transport.

Requires the mcp-spring-webmvc dependency.

Implements the MCP HTTP with SSE transport specification, providing:

+| Type           | Description                 | Example                                             |
+| -------------- | --------------------------- | --------------------------------------------------- |
+| `ref/prompt`   | References a prompt by name | `{"type": "ref/prompt", "name": "code_review"}`     |
+| `ref/resource` | References a resource URI   | `{"type": "ref/resource", "uri": "file:///{path}"}` |
-

- Creates a Servlet-based SSE server transport. It is included in the core mcp module.

- The HttpServletSseServerTransport can be used with any Servlet container.

- To use it with a Spring Web application, you can register it as a Servlet bean:

-

- Implements the MCP HTTP with SSE transport specification using the traditional Servlet API, providing:
-

+## Message Flow
-/sse) for server-to-client events